Most schools carry out KS2 test practice at several points across year 6. Pupils attempt previous years’ papers in reading, maths, and grammar, punctuation and spelling, and the results are typically entered into a spreadsheet or an assessment data tracking system such as Insight. The expected standard is, of course, a scaled score of 100 (the high score threshold is 110), and schools will generally adopt those thresholds as indicators of performance on the practice tests. The problem is that the earlier in the year a pupil takes a practice test, the less likely they are to reach the expected standard. So, can we be a bit smarter and use a different set of thresholds to predict final KS2 outcomes?

First, we’re going to need some matched data from previous years: a dataset of pupils who have results from both a practice test and the final KS2 tests. We then plot one set of results against the other on a scatter plot. This can easily be done in Excel, but if you use Insight, you already have the tool for the job and won’t need to export any data.
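If you are working outside Insight, matching simply means keeping only the pupils who appear in both datasets. The minimal sketch below assumes each pupil has a unique identifier; the IDs, scores and the `match_scores` helper are all hypothetical and only illustrate the idea.

```python
# Hypothetical practice-test and final KS2 scaled scores, keyed by a made-up pupil ID.
practice = {"P01": 95, "P02": 88, "P03": 101, "P04": 92}
final_ks2 = {"P01": 103, "P02": 97, "P03": 108, "P05": 100}

def match_scores(practice, final):
    """Return (practice, final) pairs for pupils present in both datasets.

    Pupils missing from either dataset (P04 and P05 here) are dropped.
    """
    shared = sorted(practice.keys() & final.keys())
    return [(practice[pid], final[pid]) for pid in shared]

pairs = match_scores(practice, final_ks2)
print(pairs)  # [(95, 103), (88, 97), (101, 108)]
```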

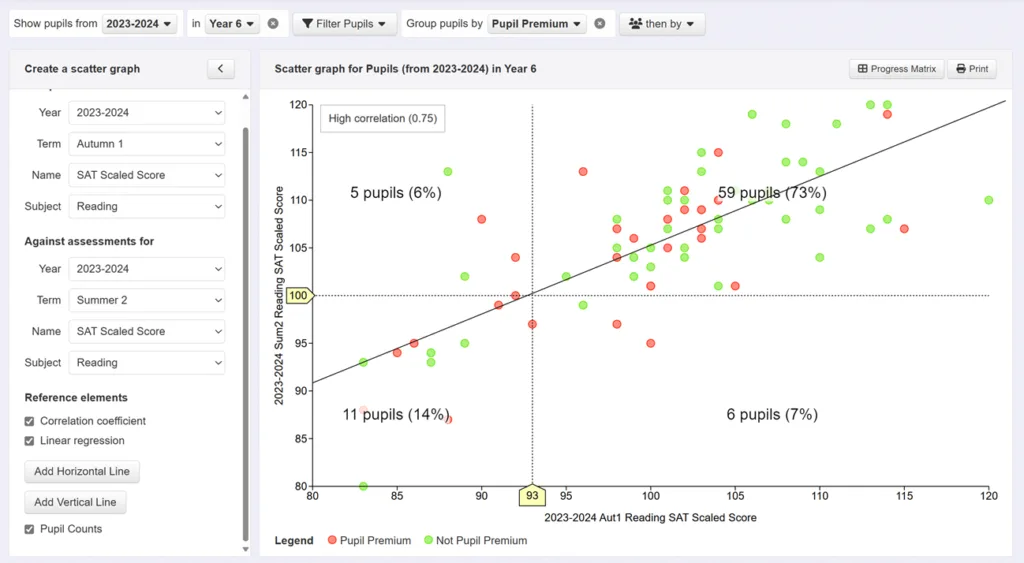

In the following example, the results of a practice test in reading taken in Autumn 1 (first half of the autumn term) of the 2023/24 academic year (x-axis) have been plotted against last summer’s KS2 results in the same subject (y-axis).

A correlation coefficient is calculated, and in this case, at 0.75, it is high. This suggests that the first test could be a reliable predictor of the second. A linear regression line (a line of best fit) is then plotted through the data, and we can see a fair amount of clustering around it. Next, a horizontal reference line is added at a score of 100 on the y-axis: every pupil on or above this line met the expected standard in reading. Finally, a vertical reference line is added and dragged until it passes through the point where the regression line crosses the horizontal reference line. That point corresponds to a scaled score of 93 on the x-axis.

By clicking the ‘pupil counts’ option, we can see that 73% of pupils are in the top right quadrant: these pupils scored 93 or above in the autumn practice test and 100 or more in the final KS2 test. Of the 65 pupils who scored 93 or more on the practice test, 59 (91%) achieved the expected standard at KS2, while only five pupils who scored below 93 went on to do so. A score of 93 or above on a reading test taken in the autumn term, which we will call the indicator score, is therefore a good predictor of reaching the expected standard the following summer.

This is, however, based on one cohort of pupils taking one particular test. The same analysis using scores from a different test (for example, where the 2019 rather than the 2018 papers were used for practice) may produce different results. It is also important to note that if we carried out the same analysis using scores from tests taken the following term, we would be likely to see the indicator score increase; by Easter, we would, of course, expect pupils to be achieving a score of 100 or more.

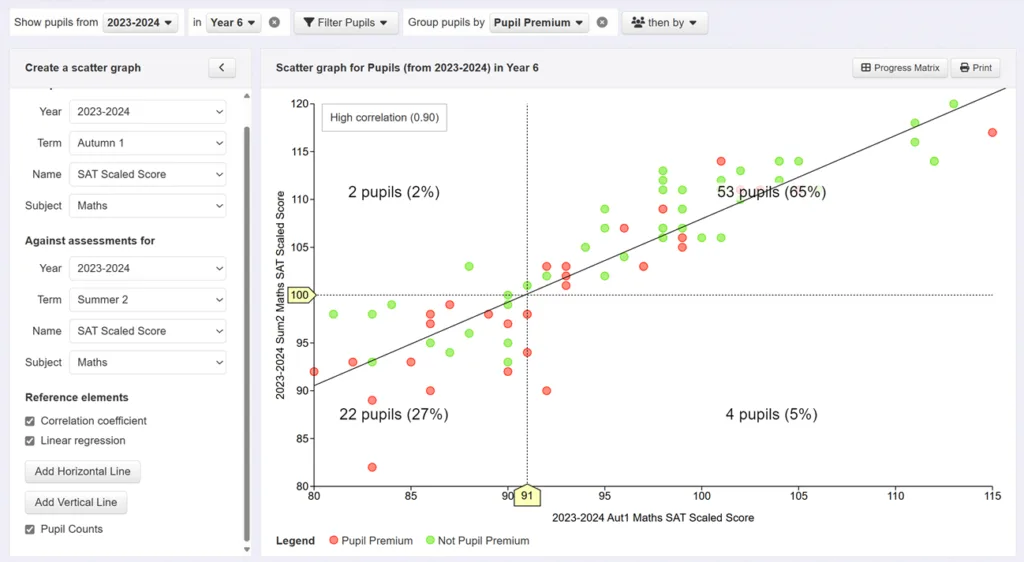

Here’s the same approach applied to the results of maths tests taken at the same two points in time.

Here the correlation is even higher, at 0.90, and a score of 91 appears to be a strong indicator that a pupil will achieve the expected standard at KS2. Of the 57 pupils who scored 91 or more on the first test, 53 (93%) achieved the expected standard at the end of the year, while only two pupils who scored below 91 went on to do so. Again, if we repeat the process later in the academic year, we can expect the indicator score to increase.
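The pupil counts behind these percentages amount to a simple tally of the four quadrants. In this sketch the function names and the pupil data are hypothetical; only the final line uses figures from the maths example (53 of the 57 pupils at or above the indicator score).

```python
def quadrant_counts(pairs, indicator, expected=100):
    """Tally pupils into the four quadrants formed by the vertical line at the
    indicator score and the horizontal line at the expected standard."""
    counts = {"top_right": 0, "top_left": 0, "bottom_right": 0, "bottom_left": 0}
    for practice, final in pairs:
        side = "right" if practice >= indicator else "left"
        level = "top" if final >= expected else "bottom"
        counts[f"{level}_{side}"] += 1
    return counts

def hit_rate(counts):
    """Share of pupils at or above the indicator score who met the expected standard."""
    at_or_above = counts["top_right"] + counts["bottom_right"]
    return counts["top_right"] / at_or_above if at_or_above else 0.0

# Hypothetical (practice, final KS2) pairs.
pairs = [(95, 103), (88, 97), (101, 108), (92, 99), (89, 100)]
counts = quadrant_counts(pairs, indicator=91)
print(counts)  # {'top_right': 2, 'top_left': 1, 'bottom_right': 1, 'bottom_left': 1}
print(f"{hit_rate(counts):.0%}")  # 67%

# Maths example from the text: 53 of the 57 pupils scoring 91 or more.
print(f"{53 / 57:.0%}")  # 93%
```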

Using standardised tests in place of KS2 practice tests

If your school uses standardised tests, the same approach can be applied by replacing the scaled scores from the practice tests with standardised scores. For example, we could plot the standardised scores from an NFER maths test taken in the summer of year 5 against the scaled scores the same cohort achieved at KS2 a year later. Scaled scores and standardised scores are fundamentally different, but because the method only looks at the relationship between the two sets of results, they can still be plotted against each other and used in the way outlined above. This means that tests taken in year 4 or year 5 can also be used to predict KS2 outcomes.

Note: When using scaled scores from KS2 practice tests, we would expect the indicator score to increase each term. When using standardised scores, however, we would expect the indicator score to remain relatively stable over time. This is because a standardised score describes a pupil’s position in the population rather than their distance from an expected standard. If we can establish that a particular position in the population is a strong predictor of the expected standard, we should be able to adopt it as a consistent indicator over time. If you are using standardised tests, we recommend carrying out the analysis at several points in time and taking an average of the resulting indicator scores. In Insight, the indicator score is set by default at a standardised score of 95 or above, which represents the top 63% of pupils nationally, but this can be changed if your analysis reveals an indicator score that you consider more reliable.
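Both points in this note can be checked with a few lines of Python. The sketch assumes standardised scores follow a normal distribution with a mean of 100 and a standard deviation of 15 (the usual convention); under that assumption, a score of 95 or above covers roughly the top 63% of pupils. The three indicator scores being averaged are hypothetical, as is the `share_at_or_above` helper.

```python
from statistics import NormalDist, mean

def share_at_or_above(score, mu=100, sigma=15):
    """Proportion of a normal population (mean mu, SD sigma) scoring at or above `score`."""
    return 1 - NormalDist(mu, sigma).cdf(score)

# Hypothetical indicator scores found by repeating the analysis at three points in time.
indicator_scores = [94, 96, 95]
average_indicator = mean(indicator_scores)

print(average_indicator)
print(f"{share_at_or_above(average_indicator):.0%}")  # 63%
```

Because real scores are whole numbers and national distributions are only approximately normal, the percentage should be read as a guide rather than an exact figure.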

Summary

Scores from one test can be used to predict results at KS2 by plotting matched data on a scatter graph and following the method described here to establish indicator scores. The examples above suggest that scaled scores of 93 (in reading) and 91 (in maths), achieved in KS2 practice tests taken in the autumn term, are strong indicators of reaching the expected standard in the final KS2 tests taken in May. Where the starting point is a KS2 practice test, we would expect the indicator score to increase over time until a score of 100 becomes the expectation. When the starting point is a norm-referenced standardised test, however, we can expect the indicator score to remain fairly stable. The more data involved, the more reliable the indicator scores will be, and we need to bear in mind that practice tests based on different years’ KS2 papers may yield different outcomes. Ideally, therefore, the analysis should be carried out for a number of cohorts using a variety of test results. To this end, Multi-Academy Trusts can harness the power of their large datasets, and Insight’s MAT-level scatter plot tool is ideal for this purpose.

Please get in touch if you’d like any further information on this method. You can also join us live at one of our DISCO events to find out how to get the most from your data.
